It started with an argument over an obscure French word that sounds more distinguished than its meaning suggests.

How do you pronounce “chassis”?

It’s “chas-ee.”

No, it’s “chas-is”.

We looked it up. It’s “chas-ee.” But it’s “shah-see” in the original French.

Anyway, unless you’re a car lover (or apparently a rifle enthusiast), the word is likely not part of your everyday vocabulary. It wasn’t in ours before this debate.

For something that sounds so foreign, it’s actually a pretty basic concept. Every vehicle on the road - from a humble Beetle to an 18-wheeler - has a chassis. The chassis is the load-bearing structure that everything else bolts onto: the engine, the body, the wheels. Without it, all you have is a disconnected pile of parts.

In the same way every vehicle has a chassis, we believe every meaningful application of AI will have an underlying, modular structure that can easily bolt on different enterprise functions.

While software frameworks have been around forever, they are often tied to a specific purpose and programming language. For example, Spring Boot helps developers build individual web applications in Java.

The possible use cases for AI go beyond the flexibility and reach of typical software solutions. Now, every workflow in every company, across every industry, has the potential for augmentation.

Of course, not everything is a worthwhile market for current AI solutions. But with the arrival of this universal, chassis-like structure, it won’t have to be. Companies may initially purchase an AI application to solve one pressing problem, only to find that when plugged into different integrations, it can solve several others!

Today, AI applications are often custom-built, like one-off concept cars. A startup in healthcare builds its own architecture. A fintech company designs a completely different pipeline. A logistics platform reinvents another stack. All brilliant, all different - but often duplicating the foundational work each time.

The future of enterprise AI will not be built on better models (sorry GPT-5). It will be created with a repeatable, modular structure - a chassis - that can support AI across different industries, use cases, and contexts.

That’s the real paradigm shift. Not dozens of siloed experiments, but a standard structure beneath the hood that enables acceleration of AI adoption everywhere.

To be sure, LLMs are still incredibly important. GPT, Claude, Gemini, and LLaMA, when enhanced with Retrieval-Augmented Generation (RAG), are like engines. They are central sources of power, but not the whole vehicle. You wouldn’t drop an F1 engine on the ground and call it a car.

The AI chassis is what surrounds and channels the power of the model to create a true system of action. These are some of the basic components, the structural frame, that make up an AI application:

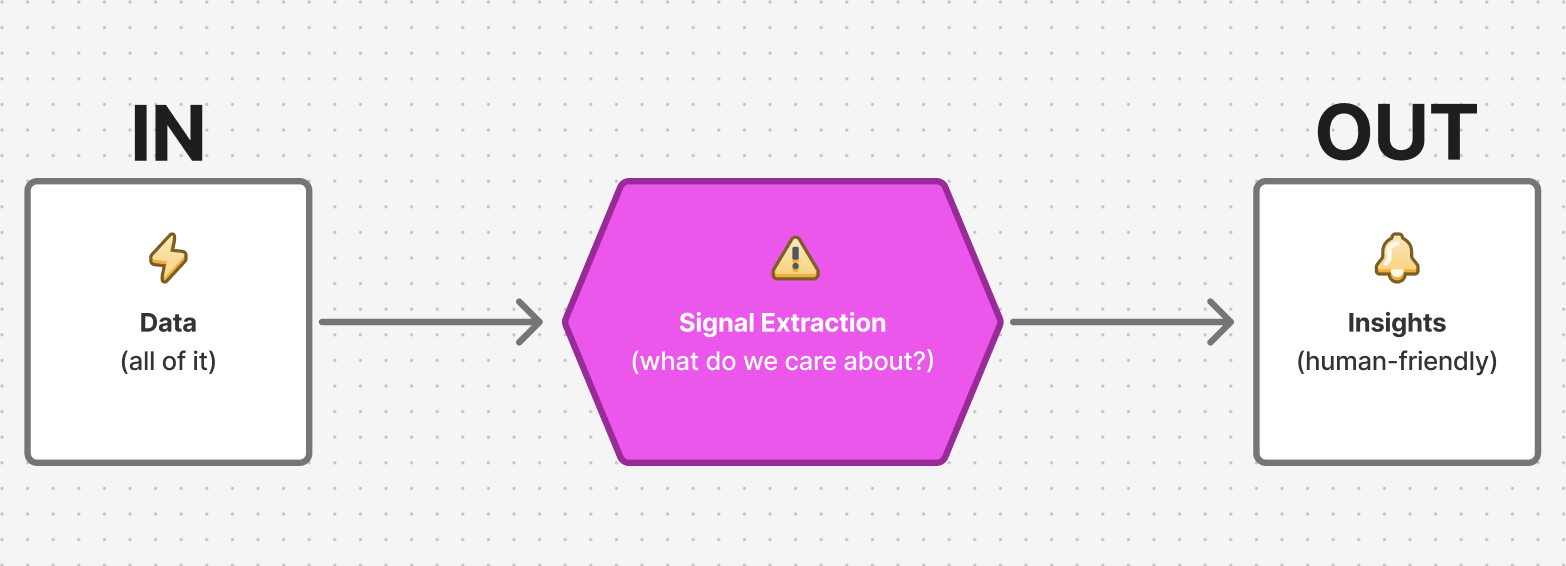

We recognized this firsthand when building an AI-native product for B2B account management at Kaboom. Even something as complex and human-driven as expansion sales can be augmented with a basic process, one that AI does extremely well.

In practice, this would become a universal toolkit: companies grab the chassis, snap in their industry- or function-specific modules, and drive.

The same way carmakers use standardized chassis designs across models (Volkswagen famously built multiple cars on the same platform), companies will be able to build countless AI solutions on top of a pre-established architecture.
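To make the "grab the chassis, snap in modules, and drive" idea a little more concrete, here is a minimal sketch of what such a structure might look like in code. Everything in it is hypothetical and chosen for illustration: the `Chassis` class, the `bolt_on` and `run` methods, the two example modules, and the `stub_engine` function standing in for a real LLM call. It is one possible shape under those assumptions, not a description of any existing product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Protocol


class Module(Protocol):
    """Hypothetical bolt-on module: one industry- or function-specific task."""

    name: str

    def build_prompt(self, payload: dict) -> str: ...


@dataclass
class Chassis:
    """Hypothetical shared frame: holds the engine and routes work to modules."""

    # The "engine" is any callable that maps a prompt to text (assumption).
    engine: Callable[[str], str]
    modules: Dict[str, Module] = field(default_factory=dict)

    def bolt_on(self, module: Module) -> None:
        # Register a module under its own name.
        self.modules[module.name] = module

    def run(self, module_name: str, payload: dict) -> str:
        # Let the module shape the request, then hand it to the engine.
        module = self.modules[module_name]
        return self.engine(module.build_prompt(payload))


@dataclass
class RenewalRiskModule:
    """Illustrative account-management module (hypothetical)."""

    name: str = "renewal_risk"

    def build_prompt(self, payload: dict) -> str:
        return f"Assess renewal risk for account {payload['account']}."


@dataclass
class ClaimsTriageModule:
    """Illustrative insurance module (hypothetical)."""

    name: str = "claims_triage"

    def build_prompt(self, payload: dict) -> str:
        return f"Triage insurance claim {payload['claim_id']}."


def stub_engine(prompt: str) -> str:
    """Stand-in for an LLM call; a real engine would call a model here."""
    return f"[model output for: {prompt}]"


# Same chassis, same engine, two unrelated functions bolted on.
chassis = Chassis(engine=stub_engine)
chassis.bolt_on(RenewalRiskModule())
chassis.bolt_on(ClaimsTriageModule())

result = chassis.run("renewal_risk", {"account": "Acme"})
print(result)
```

The point of the sketch is the separation: the engine can be swapped without touching the modules, and a new module can be added without touching the frame.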

Every vehicle needs a chassis. And so will every AI application.

The question isn’t whether enterprise AI adoption will happen - that’s already a given. The question is whether developers will build it the hard way, again and again, or whether a configurable structure will emerge that everyone can ride on to greater business outcomes.

That’s the opportunity. The groundwork for an AI solution every enterprise can use.

What do you think? Is this a distant future or a near reality?